After months of delay, OpenAI finally rolled out ChatGPT Advanced Voice to all paid subscribers last week. Like Gemini Live, it promises natural conversation with support for interruptions, and you may remember how my experience with Gemini Live went. The key difference is that ChatGPT's Advanced Voice mode offers native audio input and output. So, I thoroughly tested OpenAI's new Advanced Voice mode to see if it truly lives up to the hype.

Natural Conversation

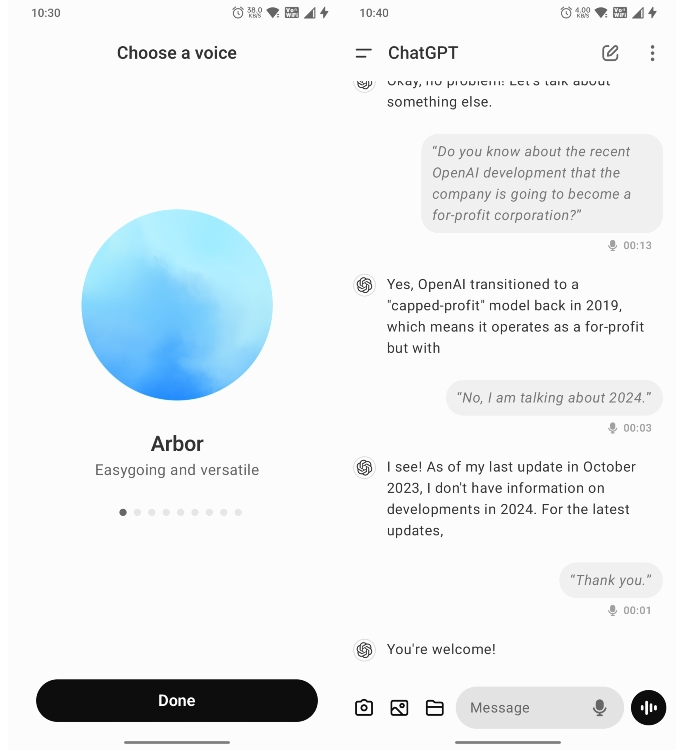

Let’s start with how natural and free-flowing ChatGPT Advanced Voice Mode is. First, you get nine different voices to choose from, and they all have a helpful, upbeat vibe. Arbor and Vale also offer a British accent, which I adore. OpenAI has removed the overly chatty ‘Sky’ voice, which resembled Scarlett Johansson’s voice in the movie Her. Here are all the available voices:

- Arbor – Easygoing and versatile

- Breeze – Animated and earnest

- Cove – Composed and direct

- Ember – Confident and optimistic

- Juniper – Open and upbeat

- Maple – Cheerful and candid

- Sol – Savvy and relaxed

- Spruce – Calm and affirming

- Vale – Bright and inquisitive

And yes, as advertised, ChatGPT Advanced Voice mode supports interruptions like Gemini Live, and it automatically stops its response if you start speaking mid-way.

I asked ChatGPT Advanced Voice about OpenAI’s recent decision to become a for-profit corporation, but it didn’t know about the development. It can’t access the internet to find the latest information, and its knowledge cut-off date is October 2023, the same as GPT-4o.

In this case, Gemini Live is better since it can browse the web and find recent information on any subject. I also tried having a deep-dive conversation about its own existence and whether it feels anything, but ChatGPT Advanced Voice consistently avoided the topic.

What I found significantly better with ChatGPT Advanced Voice Mode is that it’s really good at remembering context, something Gemini Live often forgets. Within the same voice session, if I had discussed a topic earlier, it would remember it and quickly generate a response with that context in mind. I don’t need to repeat the context every time, which is helpful.

Moreover, ChatGPT Advanced Voice supports custom instructions, where you can set who you are, where you live, what kind of responses you like, and more. Basically, you can add all your details so ChatGPT Advanced Voice mode can generate personalized responses. Overall, in terms of two-way natural interaction, ChatGPT Advanced Voice is really good.

Practice Interview

During the GPT-4o launch back in May, OpenAI demonstrated that ChatGPT Advanced Voice mode is excellent at preparing users for interviews. Although it does not have camera support yet, you can still ask ChatGPT Advanced Voice to act as an interviewer and prepare you for an upcoming job interview.

I asked it to prepare me for a job as a tech journalist, and it gave me a list of skills I should have. Then, ChatGPT Advanced Voice Mode asked me several technology-related questions and pointed out my strengths and the areas where I can improve. Over the course of the conversation, I began to feel that someone knowledgeable was interviewing me and keeping me on my toes with challenging questions.

Story Recitation

One of the promising features of ChatGPT Advanced Voice Mode is that it can recite stories with a dramatic voice and add intonations in different styles. I asked it to recite a story for my (fictional) kid in a dramatic manner, and it did. To make things fun, I told ChatGPT Advanced Voice mode to make it more engaging by adding whispers, laughter, and growling.

Compared to Gemini Live, ChatGPT did a fantastic job of adding human expressions along the way. It gasped and cheered as the story demanded. When it comes to voicing different characters, ChatGPT Advanced Voice is impressive.

I feel it can do a lot more, but for now, OpenAI seems to have toned down the experience. It’s not as dramatic as what we saw in the demos.

Sing Me a Lullaby

When I saw the May demo of ChatGPT Advanced Voice Mode in which it could sing, I was excited to test it out. So I asked it to sing me a lullaby, but to my surprise, it refused. The AI chatbot simply said, “I can’t sing or hum.” It seems OpenAI has severely reduced the capabilities of ChatGPT Advanced Voice, for reasons unclear to me.

I then asked it to sing an opera or a rhyme, and got a curt reply: “I can’t produce music.” It seems OpenAI is limiting the singing capability due to copyright concerns. So, if you hoped ChatGPT would lull your child to sleep with a personalized lullaby, that’s not possible yet.

Counting Numbers FAST

This is an interesting and fun test because it truly puts the multimodal GPT-4o voice mode through its paces. I asked ChatGPT Advanced Voice mode to count from 1 to 50 extremely fast, and it did. Midway, I said “faster,” and it sped up even more. Then, I told ChatGPT to slow down, and it followed my instructions pretty well.

In this test, Gemini Live fails because it simply reads the generated text through a text-to-speech engine. With native audio input/output, ChatGPT Advanced Voice does a splendid job.

Multilingual Conversation

In multilingual conversations, ChatGPT Advanced Voice Mode did decently well during my testing. I started in English, then switched to Hindi and then to Bengali. It kept the conversation going, but there were some hiccups: the transitions between languages were not entirely smooth. On the other hand, when I tested Gemini Live on multilingual conversation, it performed really well and effortlessly understood my queries in different languages.

Voice Impressions

ChatGPT Advanced Voice Mode can’t do voice impressions of public figures like Morgan Freeman or David Attenborough, but it can do accents very well. I told ChatGPT Advanced Voice to talk to me in a Chicago accent, and it delivered. It also did very well with Scottish and Indian accents. Overall, for conversing in various regional styles, ChatGPT Advanced Voice is pretty cool.

Limitations of ChatGPT Advanced Voice

While ChatGPT Advanced Voice mode is better than Gemini Live thanks to its end-to-end multimodal experience, its capabilities have been greatly restricted. After testing it extensively, I found that it lacks personality. Conversations still feel a bit robotic, and the lack of human-like expressions in general conversation makes it less engaging.

ChatGPT Advanced Voice mode doesn’t laugh when you share a funny anecdote. It can’t detect the speaker’s mood and doesn’t understand the sounds of animals and other creatures. All of these things should be possible, since OpenAI demonstrated them during the launch. I feel that in the coming months, users will get a truly multimodal experience, but for now, you’ll have to live with the limited version of ChatGPT Advanced Voice mode.