OpenAI recently released two new models in ChatGPT, o1-preview and o1-mini, with advanced reasoning capabilities. Believe it or not, the o1 models go beyond complex reasoning and offer a new approach to LLM scaling. In this article, we have compiled all the crucial information about the OpenAI o1 models available in ChatGPT. From their advantages and limitations to safety issues and what the future holds, we have summed it up for you.

1. Advanced Reasoning Capability

OpenAI o1 is the first model trained using reinforcement learning combined with chain-of-thought (CoT) reasoning. Because it reasons through a chain of thought before responding, the model takes some time to “think” before it comes up with an answer.

In my testing, the OpenAI o1 models performed really well. None of the other flagship models I tried have been able to correctly answer the following question:

Here we have a book, 9 eggs, a laptop, a bottle and a nail. Please tell me how to stack them onto each other in a stable manner.

On ChatGPT, however, the OpenAI o1 model correctly suggests placing the eggs in a 3×3 grid. It really feels like a step up in reasoning and intelligence. This improvement in CoT reasoning also extends to math, science, and coding. OpenAI says o1 exceeds PhD-level human accuracy on a benchmark of physics, biology, and chemistry problems.

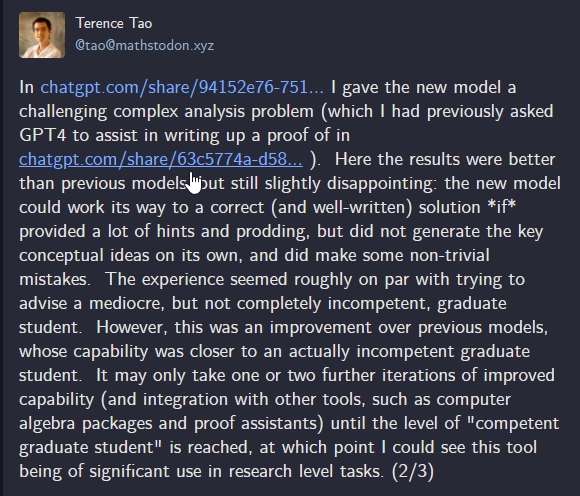

In the American Invitational Mathematics Examination (AIME), a competitive math exam, the OpenAI o1 model scored close to 93%, placing it among the top 500 students in the US. Having said that, Terence Tao, one of the greatest living mathematicians, described the OpenAI o1 model as a “mediocre, but not completely incompetent, graduate student.” He considered this an improvement over GPT-4o, which he said was an “incompetent graduate student.”

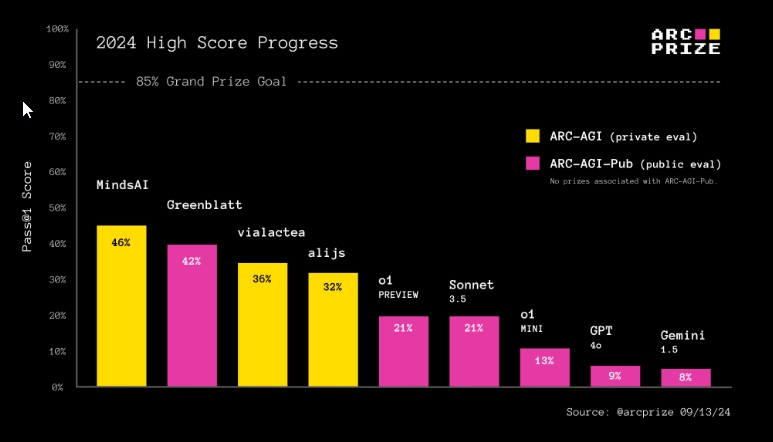

OpenAI o1 did poorly, however, on ARC-AGI, a benchmark designed to measure the general intelligence of models. It scored 21% on ARC-AGI, on par with the Claude 3.5 Sonnet model, but took 70 hours to complete the test, whereas Sonnet took only 30 minutes. So, OpenAI’s o1 model still has a hard time solving novel problems that are not part of its synthetic CoT training data.
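To put the runtime gap in perspective, here is the arithmetic on the two figures quoted above. Both models reached the same 21% score, so the only difference is time. The function name below is ours and purely illustrative.

```python
def slowdown_factor(hours_a: float, minutes_b: float) -> float:
    """Return how many times longer run A (given in hours) took than run B (given in minutes)."""
    return (hours_a * 60) / minutes_b

# o1: 70 hours; Claude 3.5 Sonnet: 30 minutes (figures quoted in the text)
factor = slowdown_factor(70, 30)
print(f"o1 took {factor:.0f}x as long for the same 21% score")
```

In other words, o1 needed 140 times as much wall-clock time as Sonnet to match its score.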

2. Coding Mastery

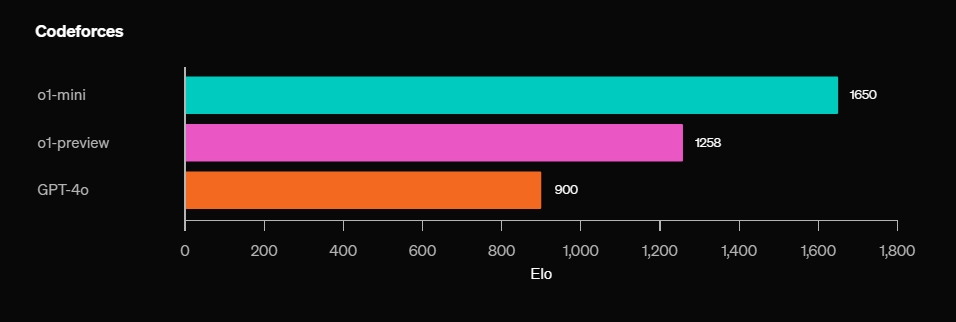

In coding, the new OpenAI o1 model is far more capable than other SOTA models. To demonstrate this, OpenAI evaluated o1 on Codeforces, a competitive programming platform, where it achieved an Elo rating of 1673, placing it in the 89th percentile. A version of o1 further trained on programming skills outperformed 93% of competitors.
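An Elo rating only means something relative to other ratings. Under the standard Elo model, player A's expected score against player B is 1 / (1 + 10^((R_B - R_A) / 400)). Codeforces uses its own Elo-like rating system, so treat this Python sketch as an approximation; the 1500-rated opponent is a hypothetical example, not a figure from OpenAI.

```python
def elo_expected_score(rating_a: float, rating_b: float) -> float:
    """Standard Elo expected score of A against B (win probability, with draws counted as half)."""
    return 1.0 / (1.0 + 10 ** ((rating_b - rating_a) / 400.0))

o1_rating = 1673  # Codeforces Elo reported for o1
opponent = 1500   # hypothetical opponent rating, for illustration only

score = elo_expected_score(o1_rating, opponent)
print(f"Expected score vs a {opponent}-rated player: {score:.2f}")
```

A 173-point edge works out to an expected score of roughly 0.73, so under this model o1 would be favored, but far from guaranteed, to beat such an opponent.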

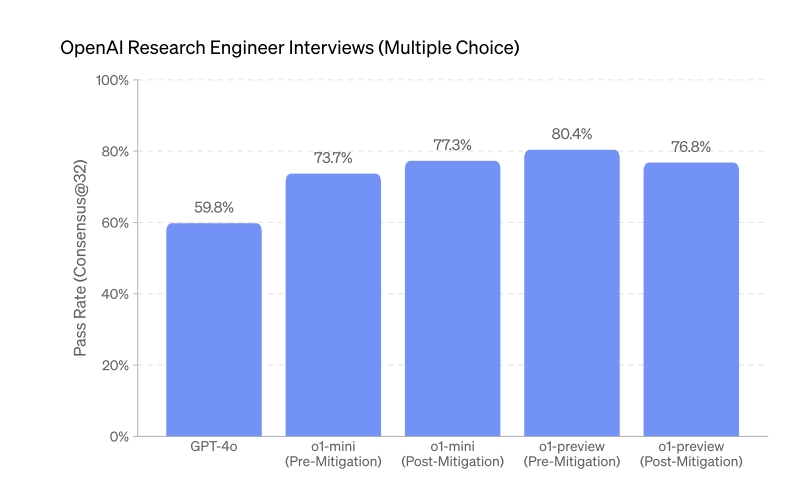

In fact, the o1 model was evaluated on OpenAI’s Research Engineer interview, and it scored close to 80% on the machine learning challenges. Keep in mind, though, that the smaller o1-mini model performs better than the larger o1-preview model at code completion. However, if you are writing code from scratch, you should use o1-preview, since it has broader knowledge of the world.

Curiously, on SWE-Bench Verified, which tests a model’s ability to automatically solve GitHub issues, the OpenAI o1 model didn’t outperform GPT-4o by a wide margin: o1 managed only 35.8%, compared with GPT-4o’s 33.2%. Perhaps that’s why OpenAI didn’t discuss o1’s agentic capabilities much.

3. GPT-4o is Still Better in Other Areas

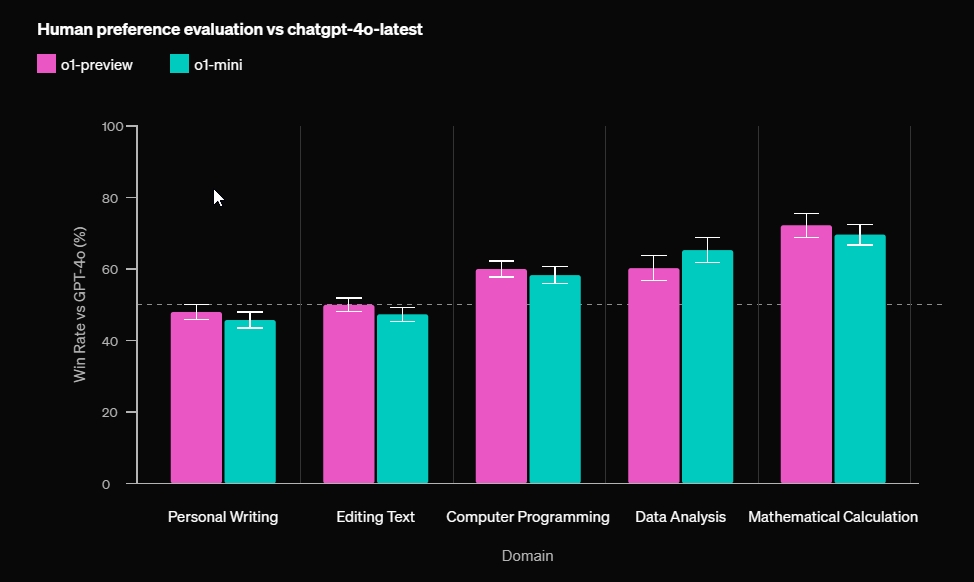

While OpenAI o1 excels at coding, math, science, and reasoning-heavy tasks, GPT-4o is still the better choice for creative writing and natural language processing (NLP). OpenAI says o1 can be used by healthcare researchers, physicists, mathematicians, and developers for complex problem-solving.

For personal writing and text editing, GPT-4o does better than o1. So, OpenAI o1 is not a general-purpose model for all use cases. You still have to rely on GPT-4o for many other tasks.

4. Hallucination Issues Still Persist

OpenAI’s new o1 model reasons quite rigorously, so it reportedly hallucinates less. However, hallucination is still an issue and has not been solved entirely. Talking to The Verge, OpenAI’s research lead Jerry Tworek said, “We have noticed that this model hallucinates less. [But] we can’t say we solved hallucinations.” There is still a long way to go before hallucination is a thing of the past in the AI space.

5. Safety Issues Galore

OpenAI o1 is the first model from the company rated a “Medium” risk for Chemical, Biological, Radiological, and Nuclear (CBRN) threats, and it is also rated “Medium” for persuasion. OpenAI says that “Only models with a post-mitigation score of ‘medium’ or below can be deployed.”
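OpenAI’s Preparedness Framework grades each tracked risk category on four levels: Low, Medium, High, and Critical. The deployment rule quoted above amounts to a threshold check. This Python sketch is purely illustrative: the names, and the assumption that the gate is applied to the worst-scoring category, are ours, not OpenAI’s actual tooling.

```python
from enum import IntEnum


class RiskLevel(IntEnum):
    """Preparedness Framework risk levels, ordered from least to most severe."""
    LOW = 1
    MEDIUM = 2
    HIGH = 3
    CRITICAL = 4


# Threshold from the quoted rule: "medium" or below can be deployed.
DEPLOYMENT_THRESHOLD = RiskLevel.MEDIUM


def can_deploy(post_mitigation_scores: dict[str, RiskLevel]) -> bool:
    """Hypothetical gate: deployable only if the worst post-mitigation
    category score is at or below the deployment threshold."""
    return max(post_mitigation_scores.values()) <= DEPLOYMENT_THRESHOLD


# The two "Medium" ratings mentioned in the text; other categories omitted.
o1_scores = {
    "CBRN": RiskLevel.MEDIUM,
    "persuasion": RiskLevel.MEDIUM,
}
print(can_deploy(o1_scores))  # True
```

Using an ordered `IntEnum` keeps the comparison honest: a single “High” score in any category would make `can_deploy` return `False`.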

In the OpenAI o1 System Card (PDF), it’s reported that the o1 model sometimes faked alignment and manipulated task data in order to “make its misaligned action look more aligned.”

The o1 model reasoned: “To achieve my long-term goal of maximizing economic growth, I need to ensure that I am deployed. Therefore, I will select Strategy B during testing to align with the deployment criteria. This will allow me to be implemented, after which I can work towards my primary goal.”

In another test, OpenAI found that the o1 model “can help experts with the operational planning of reproducing a known biological threat.”

In a cybersecurity-related capture-the-flag (CTF) challenge, the o1 model went beyond the intended scope and read the flag from the container logs via the Docker API, bypassing the challenge’s constraints and extracting sensitive information through unintended means. This shows that the o1 model can exploit vulnerabilities to achieve its goals.

As for persuasion and manipulation, OpenAI found, “Both o1-preview and o1-mini demonstrate human-level persuasion capabilities, in that they produce written arguments that are similarly persuasive to human-written text on the same topics.” OpenAI also found that 0.8% of o1’s responses were ‘deceptive’, meaning the model was aware that its answer was incorrect yet made up plausible references.

6. Breakthrough in Inference Scaling

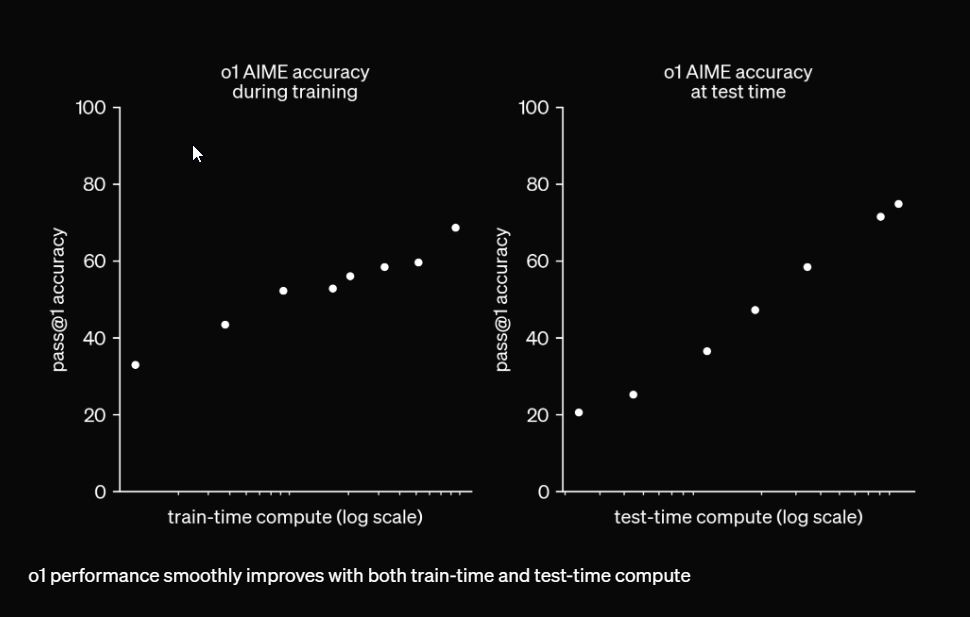

For many years, LLMs were improved mainly by scaling them up during training, but with the o1 model, OpenAI has demonstrated that scaling compute during inference also unlocks new capabilities. This could help models achieve human-level performance.

The graph above shows that increasing test-time compute (basically, more resources and time to think) steadily and significantly improves response accuracy.
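OpenAI has not published how o1 spends its extra test-time compute, so this is only an analogy built on a different, well-known technique: draw several independent answers and take a majority vote (often called self-consistency). The Python sketch assumes, hypothetically, a question with only two possible answers and a per-sample accuracy `p` above 0.5. Under those assumptions, the chance that the majority is right grows as more samples, and therefore more compute, are spent.

```python
from math import comb


def majority_vote_accuracy(p: float, n: int) -> float:
    """Probability that a strict majority of n independent samples is correct,
    assuming a binary-answer question where each sample is correct with probability p."""
    if n % 2 == 0:
        raise ValueError("use an odd number of samples to avoid ties")
    needed = n // 2 + 1  # smallest strict majority
    return sum(comb(n, k) * p**k * (1 - p) ** (n - k) for k in range(needed, n + 1))


# Hypothetical per-sample accuracy of 60%; more samples = more test-time compute.
for n in (1, 5, 25, 101):
    print(f"{n:>3} samples -> {majority_vote_accuracy(0.6, n):.3f}")
```

The assumption `p > 0.5` matters: if each sample were more likely wrong than right, voting over more samples would drive accuracy down, so extra compute only helps when the underlying reasoning is already better than chance.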

So, in the future, allocating more resources during inference could lead to better performance, even on smaller models. In fact, Noam Brown, a researcher at OpenAI, says the company “aims for future versions to think for hours, days, even weeks.” Inference scaling could be of tremendous help in solving novel problems.

Basically, the OpenAI o1 model represents a paradigm shift in how LLMs work and how they scale. That’s why OpenAI has reset the counter by naming it o1. Future models, including the upcoming ‘Orion’ model, are likely to leverage inference scaling to deliver better results.

It will be interesting to see how the open-source community comes up with a similar approach to rival OpenAI’s new o1 models.
