Apple rarely disappoints when it comes to accessibility features. iOS 18 brings several new ones, and the standout is Eye Tracking on the iPhone. Yes, one of the most remarkable features of Apple Vision Pro is now available on iPhones and iPads too. With AI-powered eye tracking, iPhone users can navigate and control their devices with just their eyes. How cool is that! The feature looks impressive in theory, so we did a thorough hands-on test to find out whether it holds up in real-life usage. Whether you have accessibility needs or just wish to try out the new feature, here’s how to turn on and use iPhone Eye Tracking in iOS 18. Let’s begin!

Eye Tracking Supported Devices

It’s worth knowing that Eye Tracking is not available on every device compatible with iOS 18. So, even if you’re running iOS 18, Eye Tracking might not be available on your iPhone. That’s because Apple’s Eye Tracking is only available on the iPhone 12 and later models, along with the iPhone SE (3rd generation).

Here’s the complete list of devices compatible with iOS 18 Eye Tracking:

- iPhone 12, iPhone 12 Mini

- iPhone 12 Pro, iPhone 12 Pro Max

- iPhone 13, iPhone 13 Mini

- iPhone 13 Pro, iPhone 13 Pro Max

- iPhone 14, iPhone 14 Plus

- iPhone 14 Pro, iPhone 14 Pro Max

- iPhone 15, iPhone 15 Plus

- iPhone 15 Pro, iPhone 15 Pro Max

- iPhone 16, iPhone 16 Plus

- iPhone 16 Pro, iPhone 16 Pro Max

- iPhone SE (3rd generation)

- iPad (10th generation)

- iPad Air (M2)

- iPad Air (3rd gen and later)

- iPad Pro (M4)

- iPad Pro 12.9-inch (5th gen or later)

- iPad Pro 11-inch (3rd generation or later)

- iPad mini (6th gen)

Turn On Eye Tracking in iOS 18

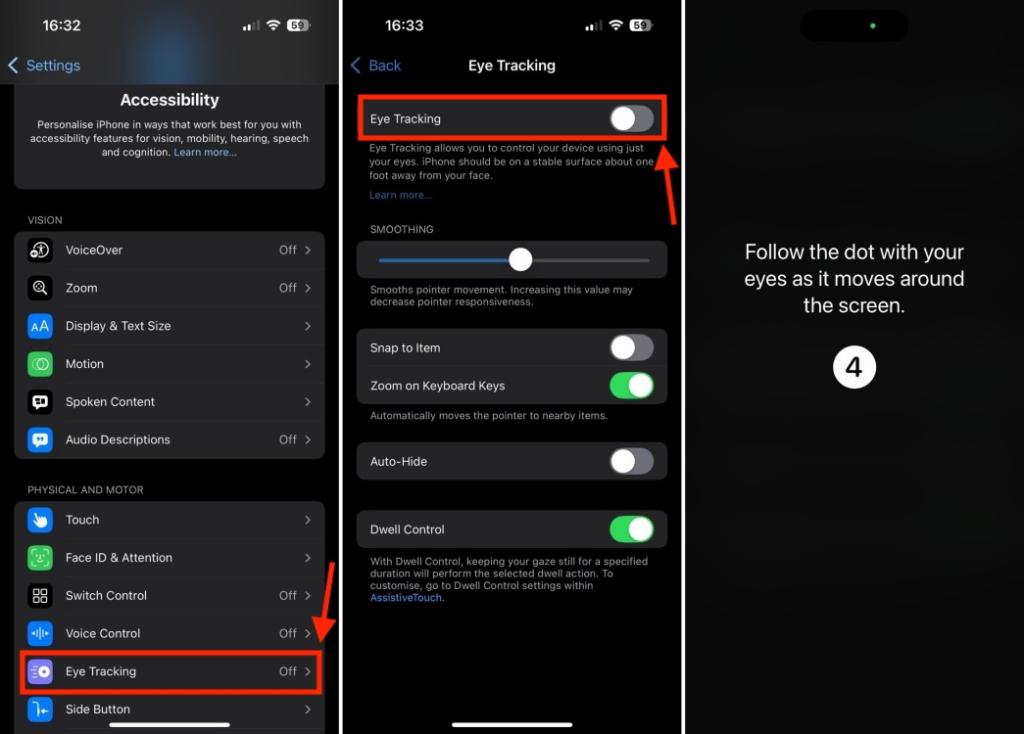

It’s pretty easy to enable Eye Tracking in iOS 18, and the setup takes only a few minutes. Here are the steps:

- Open the Settings app and visit the Accessibility section.

- Here, swipe down and choose Eye Tracking under the Physical and Motor section.

- On the next screen, turn on the Eye Tracking toggle and follow the on-screen process to set up eye tracking on your iPhone. All you have to do is look at the colored dots one by one.

- For better results, set up Eye Tracking with your iPhone or iPad placed on a stable surface, roughly 1.5 feet away from your face. Also, avoid blinking during the process.

Once you’re done, make sure to do the following:

- Turn on Dwell Control. This will help you tap on the highlighted item just by maintaining a gaze for a while.

- Leave the Snap to Item option enabled. It’s an important setting that puts a box around the item you’re currently looking at.

- The Auto Hide option lets you set a duration before the cursor reappears. By default, it is set to 0.50 seconds. You can choose from 0.10 to 4 seconds according to your preferences.

How to Use Eye Tracking on iPhone

Once you’ve enabled eye tracking on your iPhone, an invisible cursor will track and follow your eye movements. When you look at an interactable item on a page or within an app, the system will highlight it and put a rectangular box around it. To tap or select an item on the screen, you just need to keep looking at it for a while, and the chosen action will be performed.

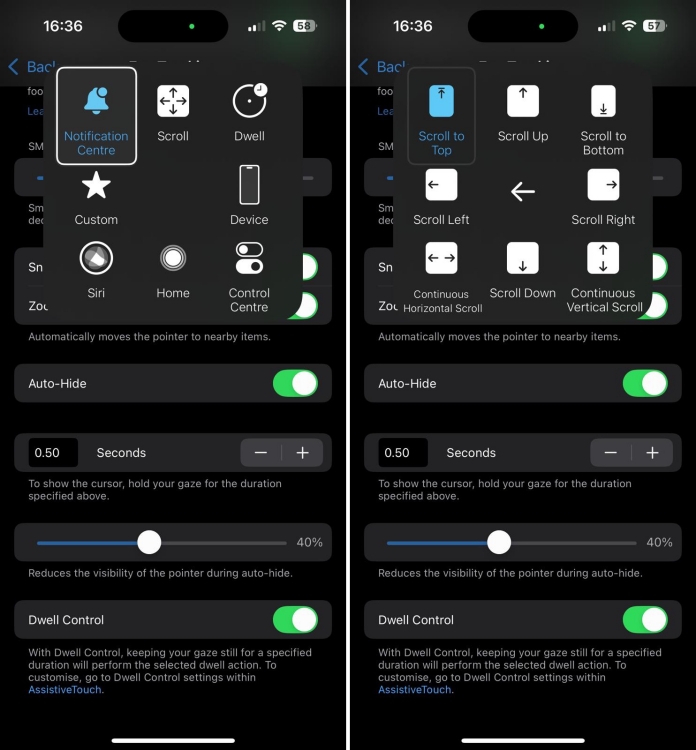

When you turn on Eye Tracking in iOS 18, it automatically enables Apple’s AssistiveTouch feature, and the two work pretty nicely together. AssistiveTouch lets you do more things just by looking at your iPhone. You’ll see an AssistiveTouch button, a circle in the bottom-right corner, which provides quick access to shortcuts and options that typically require swiping or other gestures. Its Scroll options let you scroll up or down, or jump to the top or bottom of the screen. You can also perform horizontal or vertical swipes. Most of the time, these options work pretty accurately.

With AssistiveTouch and Eye Tracking, you can activate Siri, go to Home, lock the screen, reveal Control Center, rotate the screen, adjust the volume, and do much more on your iPhone, just by looking at it.

Set Up Hot Corners with Eye Tracking

Many of us already use Hot Corners on a Mac. With the AssistiveTouch and Dwell Control options, you can also use Hot Corners on your iPhone and iPad. Interestingly, Eye Tracking in iOS 18 levels up this experience. When you turn on both Eye Tracking and Hot Corners, you can quickly trigger an action just by looking at a corner of the screen. For instance, I’ve set up the top-right corner to go to the Home Screen. By default, the Calibrate Eye Tracking action is assigned to the top-left corner.

Here’s how you can do it:

Note: Before you begin, make sure you’ve enabled the AssistiveTouch and Dwell Control options.

- On your iPhone or iPad, go to Settings -> Accessibility -> Touch.

- Here, choose AssistiveTouch and tap on Hot Corners.

- You can now assign your preferred actions to the Top Left, Top Right, Bottom Left, and Bottom Right of the screen.

How Accurate Is Eye Tracking on iPhones?

Eye Tracking works incredibly well on the Apple Vision Pro, all thanks to the advanced LEDs and infrared cameras. However, Apple’s eye tracking isn’t the most precise on the iPhone and iPad because these devices don’t have high-end tracking cameras. Rather, iPhones and iPads use front cameras and on-device intelligence to track your eye movements.

Let’s talk about the good things first. Eye tracking is easy to set up on an iPhone, and it responds pretty fast. It’s fun to use, but it isn’t flawless. Sometimes, the iPhone fails to highlight the item I’m currently looking at. As a result, I often find myself trying different eye and head movements until the system highlights what I intend to select. This happens mostly when I’m scrolling through text-rich pages, like the Settings app. That said, browsing the Home Screen and opening photos were pretty accurate. Also, toggling settings like Bluetooth, Wi-Fi, and Airplane mode was quite fast and precise most of the time. Still, the overall experience was average and nothing impressive.

I used eye tracking on the iPhone 12, iPhone 14 Pro, and iPad Pro M2. I also tried it on the iPhone 16 Plus and iPhone 16 Pro Max when I went to see them in the Apple Store. Compared to iPhones, eye tracking seems to work slightly better on an iPad. However, I feel Apple has a lot of catching up to do before iOS 18 eye tracking becomes an impressive feature.

Here are a few suggestions to make the most out of Eye Tracking on iPhones and iPads:

- Keep your iPhone/iPad on a stable surface, about 1.5 feet away from your face. If your device is too close to your face, eye tracking won’t work properly.

- If you’re holding the device in your hand, make sure to hold it as still as possible. If you move away from the device or change your seat, you might need to recalibrate.

- If eye tracking feels off, turn the feature off and set it up again.

- Make sure to sit in a well-lit space. Eye Tracking may not work properly in dark or poorly lit spaces.

- Avoid sitting in front of a light source. It might wash out your face and make it difficult for the camera to see and track your eyes.

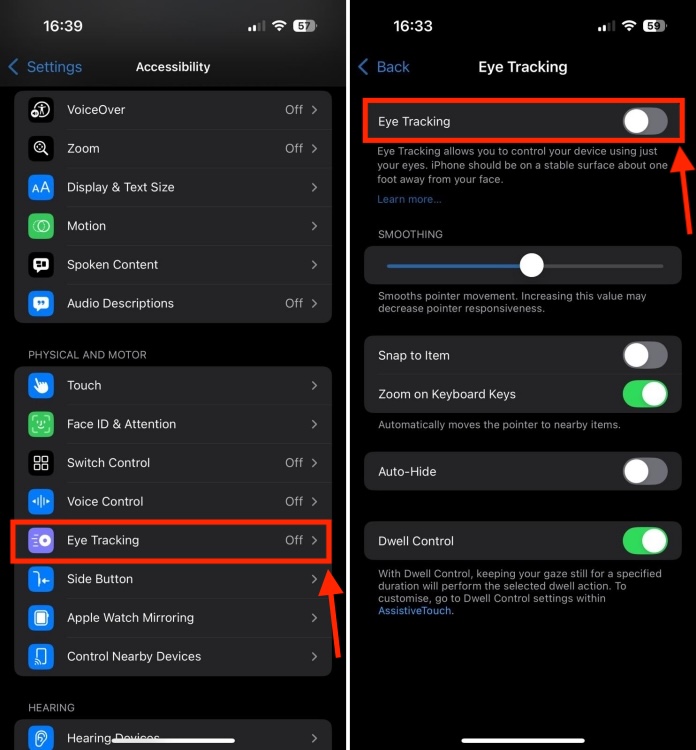

Turn Off Eye Tracking on iPhone

There are two ways to turn off eye tracking on an iPhone:

- Go to Settings -> Accessibility -> Eye Tracking, and turn off the Eye Tracking toggle. From the pop-up, hit OK to confirm your decision.

- Alternatively, you can add the Eye Tracking button to the customizable Control Center on iOS 18. Once you’ve added the control, you can tap it to turn iPhone Eye Tracking on or off right from the Control Center.

That’s how you can use eye tracking on iPhones. Have you tried this feature? How did it work for you? Tell us in the comments below.

Can an iPhone do eye tracking?

With iOS 18, the iPhone 12 and later can do eye tracking. The brand-new Eye Tracking feature in iOS 18 allows users to navigate their iPhones with their eyes.

Which iPhones support Eye Tracking?

It’s worth knowing that eye tracking is not available on all iOS 18-supported devices. Only the iPhone SE 3 and iPhone 12 or later models have the eye tracking feature.

Where is Eye Tracking in iPhone settings?

Eye Tracking is available in the Accessibility section of your iPhone settings. Go to Settings -> Accessibility -> Eye Tracking.

Does the iPhone 13 support Eye Tracking?

Yes, the iPhone 13 supports Apple’s eye tracking.

Can you use iPhone Mirroring with Eye Tracking?

No, you can’t use iPhone Mirroring with Eye Tracking. That’s because iPhone Mirroring only works when your iPhone is locked and not in use, whereas the front camera is always in use when Eye Tracking is enabled. Also, eye tracking stays active even when you lock your iPhone.

How do you turn off Eye Tracking on an iPhone?

Open Settings -> Accessibility -> Eye Tracking, and turn off the Eye Tracking toggle.